Introducing: Green Thumb Guide

Two interests defined 2023 for me: large language models and plants. So I thought, why not combine them?

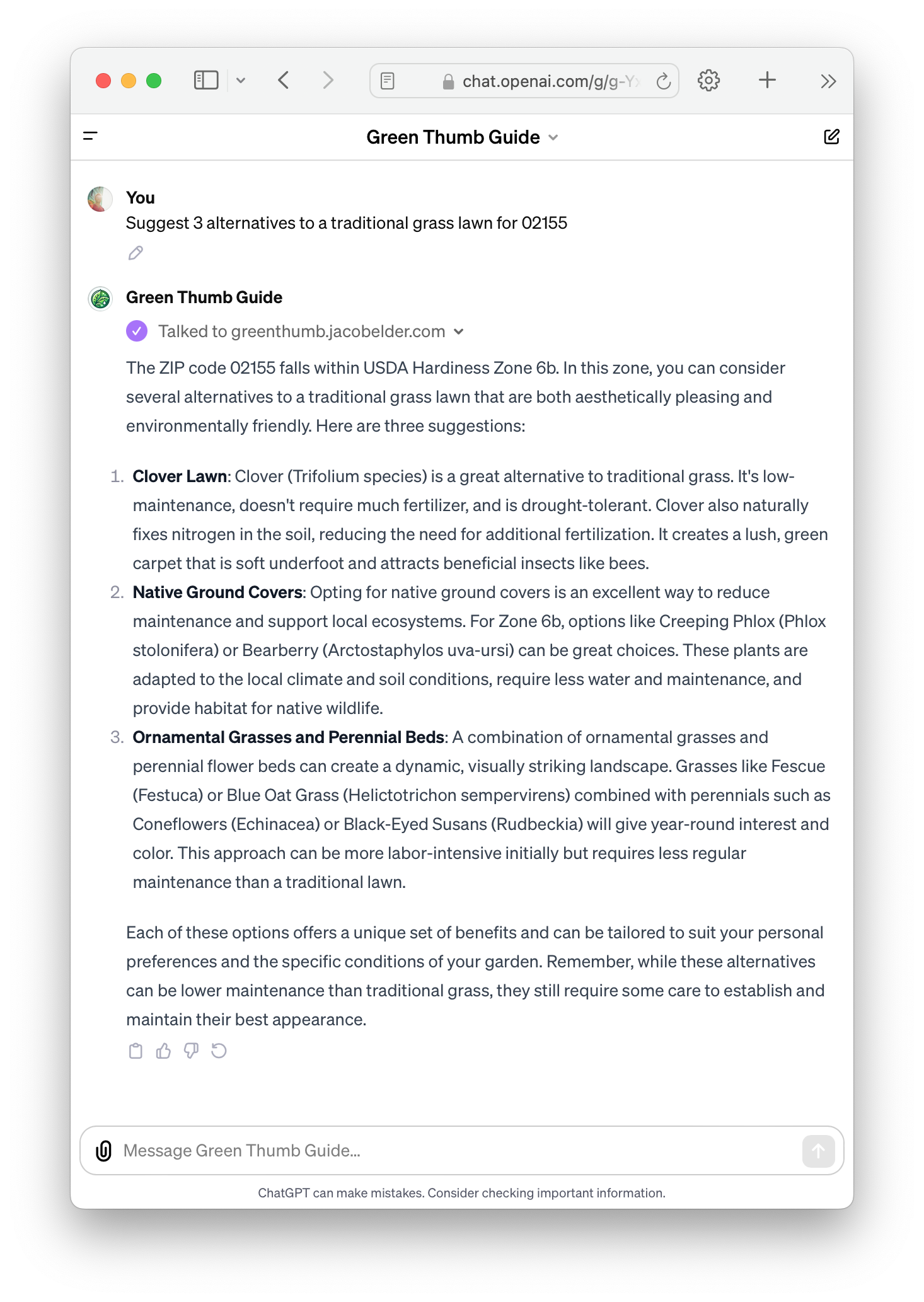

Green Thumb Guide is a chatbot intended to help newbies like me keep their houseplants alive and their gardens thriving. It has a knowledge base of articles I’ve found useful, and it also understands which climates outdoor plants can tolerate.

The first version hard-coded my hardiness zone as 6a. The USDA Plant Hardiness Zone Map is the standard by which gardeners and growers can determine which perennial plants are most likely to thrive at a location. The map is based on the average annual extreme minimum winter temperature, divided into 10 °F zones and 5 °F half zones.
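Those boundaries are simple enough to compute. As a minimal sketch, assuming the map's range runs from zone 1a (starting at -60 °F) through 13b (ending at 70 °F), a minimum temperature maps to a half zone like this. `hardiness_zone` is a hypothetical helper for illustration, not part of the project, which looks zones up by ZIP code instead:

```rust
/// Maps an average annual extreme minimum temperature (°F) to a USDA half zone
/// such as "6a". Assumes zone 1a starts at -60 °F, each full zone spans 10 °F,
/// each half zone spans 5 °F, and the map tops out at 13b (65 to 70 °F).
fn hardiness_zone(min_temp_f: f64) -> Option<String> {
    // Shift so that zone 1a's lower bound (-60 °F) becomes 0.
    let offset = min_temp_f + 60.0;
    // 13 full zones x 10 °F = 130 °F of range; NaN also fails this check.
    if !(0.0..130.0).contains(&offset) {
        return None;
    }
    let zone = (offset / 10.0).floor() as u32 + 1;
    // The lower 5 °F of each zone is the "a" half, the upper 5 °F the "b" half.
    let half = if offset % 10.0 < 5.0 { 'a' } else { 'b' };
    Some(format!("{zone}{half}"))
}
```

For instance, -10 °F lands in 6a, the zone the first version hard-coded, while -5 °F and 5 °F land in 6b and 7b, matching the `6b: -5 to 0` and `7b: 5 to 10` rows in the ZIP code data.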

That information is now dynamic: mention your ZIP code and it will tailor its answers to your USDA hardiness zone.

It’s also capable of identifying species from images and can tell you how and where to prune them.

Try it out yourself! (requires a ChatGPT account)

Implementation details

The USDA publishes hardiness zone data in a few different formats, but none that I could use for this project. Fortunately, Oregon State University was kind enough to generate some more useful ones: shapefiles, grid files, and the ZIP code data in CSV format, which is highly denormalized.

Fun fact about ZIP codes: they don’t describe a contiguous region on a map; rather, they describe a path through a region. And nature doesn’t care about that path, so I’m sure there are ZIP codes where certain areas see colder temperatures than the Hardiness Zone data suggests. The regions that ZIP codes traverse are also (usually) quite small, so the differences shouldn’t matter too much.

| zipcode | zone | trange | zonetitle |
|---|---|---|---|
| 00544 | 7b | 5 to 10 | 7b: 5 to 10 |
| 01001 | 6b | -5 to 0 | 6b: -5 to 0 |

I loaded that into a SQLite database and re-normalized it into a simple pair of tables:

```sql
CREATE TABLE zones (
    id text primary key not null,
    min_temp_f real not null
);

CREATE TABLE zone_zipcodes (
    zipcode text primary key,
    zone_id text not null references zones(id)
);

CREATE INDEX idx_zone_zipcodes_zone_id ON zone_zipcodes (zone_id);
```
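The post doesn't show how the CSV was re-normalized, most likely with plain SQL inside `sqlite3`. As an illustrative alternative, here is the same split sketched in Rust, assuming the file has a header row and no quoted or embedded commas. `normalize` and its type aliases are hypothetical names, not the project's code:

```rust
use std::collections::BTreeMap;

/// Zone id -> lower bound of the zone's temperature range (°F).
type Zones = BTreeMap<String, f64>;
/// (zipcode, zone id) pairs, one per ZIP code.
type ZoneZipcodes = Vec<(String, String)>;

/// Splits denormalized rows of `zipcode,zone,trange,zonetitle` into the two
/// normalized relations. Assumes a header row and no quoted or embedded commas.
fn normalize(csv: &str) -> Result<(Zones, ZoneZipcodes), String> {
    let mut zones = Zones::new();
    let mut zone_zipcodes = ZoneZipcodes::new();
    for line in csv.lines().skip(1).filter(|l| !l.trim().is_empty()) {
        let fields: Vec<&str> = line.split(',').map(str::trim).collect();
        let &[zipcode, zone, trange, ..] = fields.as_slice() else {
            return Err(format!("malformed row: {line}"));
        };
        // trange looks like "-5 to 0"; its first number is the zone's minimum.
        let min_temp_f: f64 = trange
            .split(" to ")
            .next()
            .and_then(|s| s.trim().parse().ok())
            .ok_or_else(|| format!("bad temperature range: {trange}"))?;
        // Many ZIP codes share a zone; the map keeps one entry per zone id.
        zones.insert(zone.to_string(), min_temp_f);
        // ZIP codes stay text so leading zeros ("00544") survive.
        zone_zipcodes.push((zipcode.to_string(), zone.to_string()));
    }
    Ok((zones, zone_zipcodes))
}
```

Fed the two rows from the table above plus a second 6b ZIP code, `zones` ends up with two entries (6b at -5.0, 7b at 5.0) while `zone_zipcodes` keeps all three ZIP codes, leading zeros intact. Storing ZIP codes as text in the schema matters for the same reason.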

The backend is in Rust, built with Axum, one of the most popular Rust web frameworks. My first impression of Axum is that it’s kind of amazing how elegant an API they built, with not a single procedural macro in sight.

The Hardiness Zone lookup uses sqlx, IMO the best way there is to query a database. Compile-time-checked SQL queries! Overkill for this project, but it’s mine and I don’t care.

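```rust
use axum::{
    extract::Query,
    http::StatusCode,
    response::{IntoResponse, Response},
    Extension, Json,
};
use serde::{Deserialize, Serialize};
use sqlx::SqlitePool;

// Shapes of these two types are inferred from how the handler uses them.
#[derive(Deserialize)]
struct QueryParams {
    q: String,
}

#[derive(Serialize)]
struct ZipcodeLookupResult {
    zone: String,
    min_temp_f: f64,
    min_temp_c: f64,
}

async fn lookup_by_zipcode(
    Query(params): Query<QueryParams>,
    Extension(pool): Extension<SqlitePool>,
) -> Response {
    // sqlx checks this query against the schema at compile time.
    let result = sqlx::query!(
        "
        select zones.*
        from zones
        join zone_zipcodes on zone_id = zones.id
        where zipcode = $1
        ",
        params.q
    )
    .fetch_optional(&pool)
    .await;

    match result {
        // Found a zone: return it as JSON.
        Ok(Some(hardiness_zone)) => Json(ZipcodeLookupResult {
            zone: hardiness_zone.id.to_string(),
            min_temp_f: hardiness_zone.min_temp_f,
            min_temp_c: fahrenheit_to_celsius(hardiness_zone.min_temp_f),
        })
        .into_response(),
        // The database itself failed.
        Err(err) => (
            StatusCode::INTERNAL_SERVER_ERROR,
            simple_page("Internal Server Error", err.to_string().as_str()),
        )
            .into_response(),
        // The query succeeded but matched no ZIP code.
        Ok(None) => (
            StatusCode::NOT_FOUND,
            simple_page("Not Found", "The requested ZIP code could not be found."),
        )
            .into_response(),
    }
}
```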
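The handler calls `fahrenheit_to_celsius`, whose body isn't shown; a plausible definition is the standard conversion C = (F - 32) × 5/9. The `is_plausible_zipcode` guard is a hypothetical addition, not something the handler above does: it passes `q` straight to the query, so a value like `1938` (missing its leading zero) simply falls through to the 404 branch. A check like this could reject malformed input before touching the database:

```rust
/// Converts degrees Fahrenheit to degrees Celsius: C = (F - 32) * 5 / 9.
fn fahrenheit_to_celsius(f: f64) -> f64 {
    (f - 32.0) * 5.0 / 9.0
}

/// Hypothetical input check: a basic US ZIP code is exactly five ASCII digits.
/// Leading zeros are significant, so the value stays a string, never a number.
fn is_plausible_zipcode(q: &str) -> bool {
    q.len() == 5 && q.bytes().all(|b| b.is_ascii_digit())
}
```

With this check, `01938` passes, while `1938`, `0193a`, and ZIP+4 forms such as `01938-1234` are rejected.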

The HTML is generated with Maud, whose templates are compiled into Rust code at build time.

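```rust
use maud::{html, Markup};

/// Renders a minimal page with a heading and one paragraph, styled with Tufte CSS.
fn simple_page(title: &str, p: &str) -> Markup {
    html! {
        head { link rel="stylesheet" href="https://edwardtufte.github.io/tufte-css/tufte.css"; }
        body {
            h1 { (title) }
            p { (p) }
        }
    }
}
```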
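Maud escapes anything spliced in with `(...)`, such as `(title)` and `(p)` above, unless it's wrapped in `PreEscaped`. That matters here because the 500 page splices in `err.to_string()`. For illustration only, this is a std-only sketch of the same kind of escaping; `escape_html` is a hypothetical helper, not Maud's implementation:

```rust
/// Escapes the characters that are significant in HTML text and attribute values.
fn escape_html(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    for c in input.chars() {
        match c {
            '&' => out.push_str("&amp;"),
            '<' => out.push_str("&lt;"),
            '>' => out.push_str("&gt;"),
            '"' => out.push_str("&quot;"),
            '\'' => out.push_str("&#39;"),
            _ => out.push(c),
        }
    }
    out
}
```

So an error message containing markup, like `<b>"x" & y</b>`, comes out as inert text rather than live HTML.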

OpenAI requires that public GPTs have a privacy policy, so here’s Green Thumb Guide’s Privacy Policy: not applicable. ZIP codes are not considered PII, and I don’t log them anyway. That page is another route in the same Axum app, which altogether looks like this:

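```rust
use axum::{routing::get, Extension, Router};

// Inside main, once the SQLite `pool` has been created:
let app = Router::new()
    // JSON endpoint called by the GPT Action.
    .route("/hardiness_zone", get(lookup_by_zipcode))
    // Static privacy policy page, rendered with the same Maud helper.
    .route(
        "/privacy_policy",
        get(|| async {
            simple_page(
                "Privacy Policy",
                "This site does not collect any personal information.",
            )
        }),
    )
    // Share the pool with every handler via the Extension extractor.
    .layer(Extension(pool));
```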

Deployment is handled by a small CDK stack: just 9 resources describe everything, including DNS records, TLS certificate, routing, and execution environment.

The Axum app runs in AWS Lambda. The database is just a file, so it gets shipped as a “layer” that is copied into the Lambda’s runtime environment. The database won’t change very often, and iterating on the code with `cdk watch --hotswap` is extremely quick. Runtime performance is about as good as you might expect from Rust and SQLite: a cold start takes a few tens of milliseconds, and requests on a warm container take about 5 ms.

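```typescript
import { Duration } from "aws-cdk-lib";
import * as lambda from "aws-cdk-lib/aws-lambda";
import * as logs from "aws-cdk-lib/aws-logs";
// Assumed source of RustFunction: the rust.aws-cdk-lambda construct library,
// whose `directory` and `setupLogging` props match the ones used here.
import { RustFunction } from "rust.aws-cdk-lambda";

// Inside the stack's constructor, where `this` is the Stack.
const hardinessZoneFunction = new RustFunction(this, "HardinessZoneFunction", {
  directory: "./usda_hardiness_zone/",
  logGroup: new logs.LogGroup(this, "HardinessZoneFunctionLogGroup"),
  timeout: Duration.seconds(3),
  // The SQLite database ships as a layer; Lambda unpacks it under /opt.
  layers: [
    new lambda.LayerVersion(this, "HardinessDatabaseLayer", {
      code: lambda.Code.fromAsset("./usda_hardiness_zone/database.zip"),
    }),
  ],
  setupLogging: true,
  // Reserves, and caps, this function at 10 concurrent executions.
  reservedConcurrentExecutions: 10,
});
```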
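Lambda extracts layer contents under `/opt`, which is read-only at runtime (only `/tmp` is writable), so the app has to open the database from there without expecting to write to it. A minimal sketch of building the connection string, assuming a hypothetical `DATABASE_PATH` override for local development, a hypothetical `hardiness.sqlite3` file at the root of `database.zip`, and sqlx's `sqlite://` URL form with its `mode=ro` option:

```rust
/// Default location of the database. Lambda unpacks layer files under /opt;
/// the file name is a hypothetical stand-in for whatever database.zip contains.
const DEFAULT_DB_PATH: &str = "/opt/hardiness.sqlite3";

/// Builds a read-only SQLite connection URL, preferring an explicit override
/// (e.g. a local path during development) over the Lambda layer location.
fn database_url(override_path: Option<&str>) -> String {
    let path = override_path.unwrap_or(DEFAULT_DB_PATH);
    // "sqlite://" followed by an absolute path gives three slashes;
    // mode=ro asks SQLite to open the file read-only.
    format!("sqlite://{path}?mode=ro")
}
```

In `main`, something like `SqlitePool::connect(&database_url(std::env::var("DATABASE_PATH").ok().as_deref())).await?` would then open the pool that the router shares via `Extension`.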

The above are all technologies I’d either used “for real” at work or played with extensively elsewhere. The OpenAI “Action” integration, however, requires an OpenAPI 3.0 spec. Having been entrenched in GraphQL for the past 7 years, I barely knew what that was. But OpenAI does!

> Write an OpenAPI 3 spec for this URL: https://greenthumb.jacobelder.com/hardiness_zone?q=01938

The response, with minor alterations, was enough to produce the integration shown in the screenshot at the top of this page. Other GPTs were used to create the logo and even the name, “Green Thumb Guide.”

The full project is available on GitHub: jelder/green_thumb_guide